MCP (Model Context Protocol)

https://modelcontextprotocol.io/introduction

Quick Setup

Server

server.py

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("Math")


@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


@mcp.tool()
def multiply(a: int, b: int) -> int:
    """Multiply two numbers"""
    return a * b


if __name__ == "__main__":
    mcp.run(transport="stdio")
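Before wiring the tools into MCP, it can help to sanity-check the tool logic on its own. The sketch below repeats the two functions as plain Python, without the `@mcp.tool()` decorator (an assumption made only so the check runs without the `mcp` package installed), and chains them the way a client would for a two-step calculation.

```python
# Plain-Python copies of the two tools, without the MCP decorator,
# so this check runs with the standard library only.
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


def multiply(a: int, b: int) -> int:
    """Multiply two numbers"""
    return a * b


# Chain the tools: first add, then multiply the result.
partial = add(3, 5)
total = multiply(partial, 12)
print(partial, total)
```

If the values printed here look right, any later problem is more likely in the transport or client setup than in the tool bodies.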

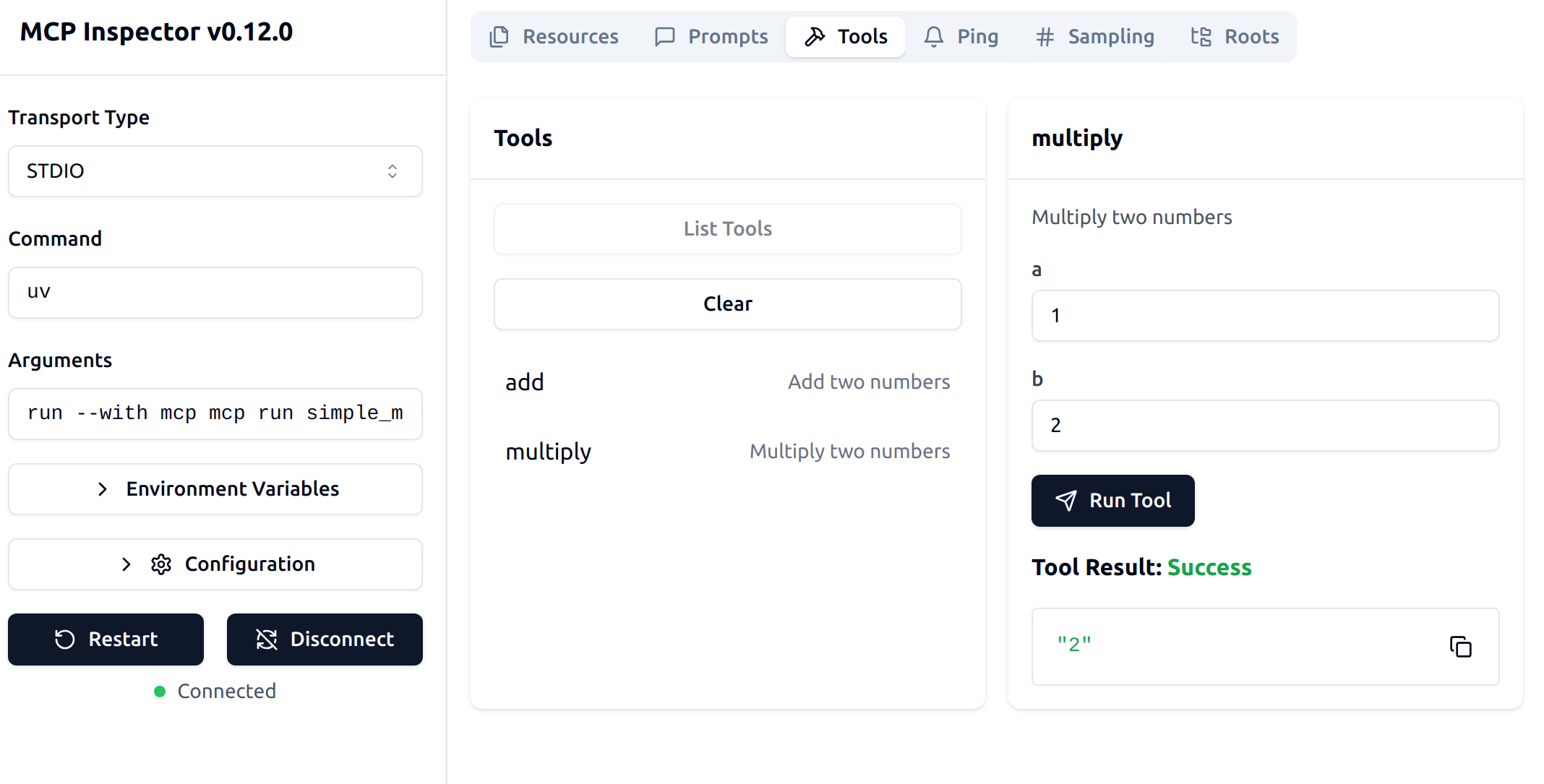

Visualize the server (this requires npx; I already had it installed, and the @modelcontextprotocol/inspector package was downloaded through a proxy)

mcp dev server.py
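For context on what a client exchanges with the server over stdio: MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below only builds such a request as a dictionary and serializes it. The helper name `build_tool_call` and the request id `1` are illustrative assumptions, and a real session also performs an initialization handshake first, which is not shown here.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's tools/call method.

    build_tool_call is a hypothetical helper for illustration,
    not part of the mcp package.
    """
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Request asking the server to run the add tool with a=3, b=5.
request = build_tool_call(1, "add", {"a": 3, "b": 5})

# Serialize to a single line of JSON text.
line = json.dumps(request)
print(line)
```

In practice the `mcp` client library builds and sends these messages for you; the sketch is only meant to make the wire format less opaque.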

Client

Here I use the LangChain framework together with langchain_mcp_adapters.

client.py

import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client
from langchain_mcp_adapters.tools import load_mcp_tools
from langgraph.prebuilt import create_react_agent

from model import llm

server_params = StdioServerParameters(
    command="python",
    # Make sure to update this to the full absolute path to your server.py file
    args=["server.py"],
)


async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            # Initialize the connection
            await session.initialize()

            # Get tools
            tools = await load_mcp_tools(session)
            print(tools)

            # Create and run the agent
            agent = create_react_agent(llm, tools)
            agent_response = await agent.ainvoke({"messages": "what's (3 + 5) x 12?"})
            print(agent_response)


if __name__ == "__main__":
    asyncio.run(run())
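The client launches the server as a subprocess, so a relative script path only works when the client is started from the directory that contains the server file. A minimal sketch of resolving an absolute path is shown below. The helper `resolve_server_script` is hypothetical, and using `sys.executable` instead of the literal `"python"` is an optional choice so the subprocess uses the same interpreter (and virtual environment) as the client.

```python
import sys
from pathlib import Path


def resolve_server_script(script_name, base_dir=None):
    """Return an absolute path to the server script.

    resolve_server_script is a hypothetical helper. base_dir defaults
    to the current working directory when not given.
    """
    base = Path(base_dir) if base_dir is not None else Path.cwd()
    return str((base / script_name).resolve())


# Values that could be passed to StdioServerParameters(command=..., args=...).
server_script = resolve_server_script("server.py")
command = sys.executable
args = [server_script]
print(command, args)
```

Inside client.py, passing `Path(__file__).parent` as `base_dir` would anchor the path to the client's own directory rather than to wherever the command was run.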

model.py

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(
    api_key='sk_xxxx',
    model='deepseek/deepseek-v3-0324',
    base_url='https://xxx.com/v3/openai'
)
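Hard-coding the API key in model.py makes it easy to leak when the file is shared. One possible alternative, sketched below, reads the settings from environment variables. The variable names `LLM_API_KEY`, `LLM_MODEL`, and `LLM_BASE_URL` and the helper `load_llm_settings` are assumptions for illustration, not names defined by LangChain or the provider.

```python
import os


def load_llm_settings(env=None):
    """Collect ChatOpenAI keyword arguments from a mapping of settings.

    load_llm_settings and the LLM_* variable names are hypothetical.
    env defaults to the process environment.
    """
    env = os.environ if env is None else env
    api_key = env.get("LLM_API_KEY")
    if not api_key:
        raise RuntimeError("Set LLM_API_KEY before starting the client")
    return {
        "api_key": api_key,
        "model": env.get("LLM_MODEL", "deepseek/deepseek-v3-0324"),
        "base_url": env.get("LLM_BASE_URL"),
    }


# Demonstration with an explicit mapping instead of the real environment.
settings = load_llm_settings(
    {"LLM_API_KEY": "sk_xxxx", "LLM_BASE_URL": "https://xxx.com/v3/openai"}
)
print(settings["model"])
```

With this in place, model.py could create the model as `llm = ChatOpenAI(**load_llm_settings())`, keeping the key out of the source file.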